
Title

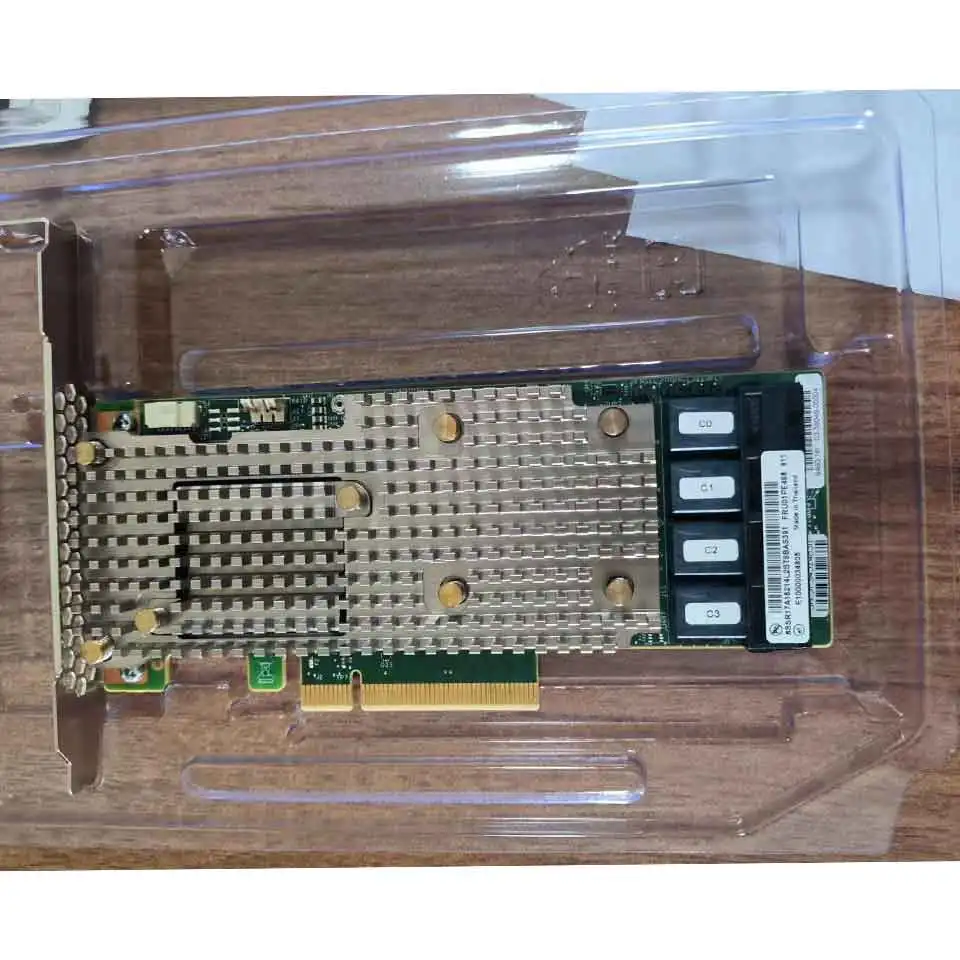

NVIDIA ConnectX-7 MCX755106AC-HEAT Dual-Port 200GbE / NDR200 InfiniBand Network Adapter

Keywords

NVIDIA ConnectX-7, MCX755106AC-HEAT, 200Gb Ethernet, NDR200 InfiniBand, dual-port QSFP112, PCIe5 x16 NIC, RoCE/RDMA networking, data center NIC

Description

The MCX755106AC-HEAT is a high-performance network interface card from NVIDIA’s ConnectX-7 family, combining both 200Gb Ethernet and NDR200 InfiniBand capabilities in one dual-port QSFP112 adapter. It’s designed for data center, HPC, AI, cloud infrastructure, and high-throughput RDMA / RoCE traffic patterns.

This adapter uses a PCIe Gen5 x16 host interface, giving very high bandwidth between the host server and the network fabric, especially under heavy load. It supports Secure Boot and is Crypto Enabled, which makes it better suited to environments where network security and tamper resistance matter.

Form factor is half-height, half-length (HHHL), dimensions ~68.90 mm × 167.65 mm, meaning it fits a wide range of server chassis. It also has an auxiliary PCIe passive card option plus Cabline SA-II Plus harnesses, which expand compatibility for socket-direct or other deployment-specific cabling topologies.

Operating temperature range is 0-55 °C, with storage set from -40 to 70 °C, which is typical for enterprise NICs. Power draw is about 25.9 W (for the "AC" variant; "AS" variant slightly lower) when used with passive cables under PCIe Gen5 x16 mode.

Because it supports both Ethernet and InfiniBand modes, this NIC is flexible. You can use it for standard TCP/IP 200GbE networking or for high-performance RDMA over InfiniBand (NDR200), depending on network infrastructure. This duality is very useful in mixed-environment data centers.
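To see which mode each port is currently running in on a Linux host, one option is to read the `link_layer` attribute that the kernel RDMA stack exposes under `/sys/class/infiniband`. The sketch below assumes a Linux host with the RDMA drivers loaded. The device name `mlx5_0` in the comment is only an example of typical naming, not something stated on this page.

```python
from pathlib import Path


def port_link_layers(sysfs_root="/sys/class/infiniband"):
    """Return {(device, port): link_layer} for each RDMA port under sysfs_root.

    link_layer is the string the kernel reports, normally "InfiniBand"
    or "Ethernet". An empty dict means no RDMA devices were found.
    """
    result = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return result
    for device_dir in sorted(root.iterdir()):          # e.g. mlx5_0 (example name)
        ports_dir = device_dir / "ports"
        if not ports_dir.is_dir():
            continue
        for port_dir in sorted(ports_dir.iterdir()):   # e.g. 1
            link_file = port_dir / "link_layer"
            if link_file.is_file():
                key = (device_dir.name, port_dir.name)
                result[key] = link_file.read_text().strip()
    return result


if __name__ == "__main__":
    for (device, port), layer in port_link_layers().items():
        print(f"{device} port {port}: {layer}")
```

The function only reads state. Changing a port between Ethernet and InfiniBand is a firmware configuration step and is not covered by this sketch.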

Key Features

- Dual ports of QSFP112 supporting both 200GbE and NDR200 InfiniBand modes.

- High-speed PCIe Gen5 x16 host interface for maximum throughput, backward compatible with PCIe4/3.

- Secure Boot & Crypto Enabled (AC variant) for enhanced security in networking.

- RoCE/RDMA support for low latency, high throughput in data center / HPC workloads.

- Auxiliary PCIe extension option via passive card + Cabline SA-II Plus harnesses allows socket-direct style deployment.

- Compact HHHL form factor with heatsink (HEAT version), suitable for most standard server racks.

- Low power (~25.9W typical under load) for its class, reducing thermal and power overhead per port.

Configuration

| Component | Specification / Detail |
|---|---|
| Part Number / Model | MCX755106AC-HEAT (NVIDIA ConnectX-7) |
| Ports | 2 × QSFP112 (dual-port) |
| Network Types | Ethernet (200/100/50/25/10GbE), InfiniBand (NDR200/HDR/HDR100 etc.) |
| Host Interface | PCIe5 x16 (also backward support for PCIe4/3 via SERDES 16/32 GT/s) |
| Power Consumption | ~25.9W (AC variant), ≈24.9W (AS variant), under passive cable / typical load |
| Physical Size | Half-Height Half-Length, ~68.90 mm × 167.65 mm |
| Operating Temp | 0-55 °C (operational) / -40-70 °C (storage) |
| Special Features | Secure Boot, Crypto Enabled, Socket-Direct passive cable option |
| Compatibility Modes | RoCE, RDMA, Ethernet, InfiniBand |

Compatibility

The MCX755106AC-HEAT works with any server motherboard that has a free PCIe x16 slot, preferably one supporting PCIe Gen5 so the card can reach full speed. Servers with PCIe Gen4 are compatible, but the host link runs at a lower speed. Confirm that the chassis provides sufficient cooling and that the bracket height (tall / HHHL) fits the slot with the heatsink installed.
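The difference between PCIe generations can be put in numbers with a little arithmetic. The sketch below uses the standard per-lane transfer rates (8, 16 and 32 GT/s for Gen3, Gen4 and Gen5) and 128b/130b line encoding to estimate raw x16 link bandwidth: roughly 126, 252 and 504 Gb/s respectively. It ignores packet and protocol overhead, so real throughput is somewhat lower. The function names are illustrative.

```python
# Per-lane transfer rate in GT/s and line-encoding efficiency for each
# PCIe generation (Gen3 and later use 128b/130b encoding).
PCIE_GENERATIONS = {
    "Gen3": (8.0, 128 / 130),
    "Gen4": (16.0, 128 / 130),
    "Gen5": (32.0, 128 / 130),
}


def pcie_link_gbps(generation, lanes=16):
    """Raw one-direction link bandwidth in Gb/s, before protocol overhead."""
    rate_gt_s, encoding = PCIE_GENERATIONS[generation]
    return rate_gt_s * encoding * lanes


def can_sustain(generation, ports=2, port_gbps=200.0, lanes=16):
    """True if the raw host link is at least as fast as all ports at line rate."""
    return pcie_link_gbps(generation, lanes) >= ports * port_gbps


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        print(f"{gen} x16: {pcie_link_gbps(gen):.0f} Gb/s raw, "
              f"one port at 200G: {can_sustain(gen, ports=1)}, "
              f"two ports at 200G: {can_sustain(gen, ports=2)}")
```

By this estimate a Gen4 x16 slot has headroom for one port at 200 Gb/s but not for both ports at line rate simultaneously, while a Gen3 x16 slot falls short even for a single port.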

For network connectivity, your switches need compatible QSFP112 ports, and the cables or transceivers (passive DAC/CR4 copper or optical) must match the link type. In InfiniBand mode, the switch must support NDR200 or a lower rate such as HDR. In Ethernet mode, the switch must support 200GbE or one of the lower fallback speeds.
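As a rough planning aid, the fallback behaviour can be modelled as picking the highest speed that both ends list as supported. The sketch below uses the Ethernet speeds from the Configuration table. The switch speed sets are made-up inputs, and real link negotiation also depends on the cable or transceiver and on FEC settings, which this simplification ignores.

```python
# Ethernet speeds (Gb/s) listed for this adapter in the Configuration table.
NIC_ETHERNET_SPEEDS = {200, 100, 50, 25, 10}


def best_common_speed(switch_speeds, nic_speeds=NIC_ETHERNET_SPEEDS):
    """Return the highest speed (Gb/s) both ends support, or None if none match."""
    common = set(switch_speeds) & set(nic_speeds)
    return max(common) if common else None


if __name__ == "__main__":
    # Hypothetical switch port capabilities, for illustration only.
    print(best_common_speed({400, 200, 100}))  # a 400G-capable port
    print(best_common_speed({100, 40}))        # an older 100G/40G port
    print(best_common_speed({40}))             # no speed in common
```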

Drivers and firmware should be current: NVIDIA’s ConnectX-7 driver stack (for your OS — Linux, Windows, etc.) plus firmware that supports Secure Boot / Crypto features. Missing updates may limit performance or features (e.g. passive adapter harness option).

Power budget: because the NIC consumes ~25-26W under typical loads with passive cables, ensure the server PSU and airflow can handle this plus any adjacent NICs and cards. The HEAT version dissipates that power through its heatsink, so adequate airflow across the card is necessary.

Usage Scenarios

1) High-Performance Compute / AI Cluster Fabrics: Great for interconnect in GPU/CPU clusters needing very high bandwidth and low latency, especially where RDMA or InfiniBand fabrics are used (NDR200) to avoid network bottlenecks.

2) Data Center Ethernet Backbone: Use it for 200GbE switches/routers interconnect, server uplinks, or leaf/spine architecture where high throughput is required.

3) RoCE / RDMA for Storage Systems: For NVMe-over-Fabric, or distributed storage systems like Ceph or Lustre, or other systems using RDMA, this NIC accelerates storage access by reducing latency and CPU overhead.

4) AI/ML / HPC Inference & Training: Use in inference serving nodes or training nodes where large model weights are shared, reducing data movement overhead across nodes. Also helpful in virtualization of GPU workloads.

5) Secure / Compliance Environments: Because Secure Boot and Crypto Enabled are supported, this card is appropriate where network encryption, trusted boot, and compliance (e.g. for financial, government, or healthcare systems) rank high in requirement.

Frequently Asked Questions

- Q: Does MCX755106AC-HEAT require PCIe Gen5 to get full 200Gbps performance, or will it work with Gen4 as well?

  A: It will work with PCIe Gen4 and even Gen3 (depending on host), but to reach full advertised throughput (especially with both ports active and all 16 lanes in use), PCIe Gen5 x16 is preferred. Gen4 will likely impose a bandwidth ceiling.

- Q: Can this adapter operate in both Ethernet 200GbE mode and InfiniBand NDR200 mode interchangeably?

  A: Yes. The MCX755106AC-HEAT supports both Ethernet and InfiniBand (NDR200) modes. Mode selection depends on switch/fabric configuration and firmware.

- Q: What cabling or transceivers are needed to make full use of its QSFP112 ports?

  A: You'll need optical or copper QSFP112/CR4/CR8 cables or transceivers rated for 200Gb/s or for the fallback speeds (100/50/25/10Gb/s). Passive cables may limit reach, so check the cable spec. If you use passive cabling, also make sure both the switch port and the NIC support it.

- Q: What are the thermal and mechanical requirements for the HEAT version?

  A: The HEAT version includes a heatsink, so it needs good airflow within the server enclosure. Confirm that the server's PCIe slot accommodates the bracket height (tall bracket) and the heatsink. Also verify the power budget: ~25.9W under load plus any auxiliary cabling/harness.
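Returning to the first question above about PCIe generation: on Linux, the speed and width a card actually negotiated can be compared with what it is capable of by reading the PCI sysfs attributes `current_link_speed`, `current_link_width`, `max_link_speed` and `max_link_width`. The sketch below assumes a Linux host. The PCI address `0000:3b:00.0` is a hypothetical placeholder that you would replace with the adapter's own address (for example from `lspci`).

```python
from pathlib import Path

LINK_ATTRIBUTES = (
    "current_link_speed",
    "current_link_width",
    "max_link_speed",
    "max_link_width",
)


def read_pcie_link(bdf, sysfs_root="/sys/bus/pci/devices"):
    """Read negotiated and maximum PCIe link attributes for one device.

    bdf is the PCI address, e.g. "0000:3b:00.0" (hypothetical example).
    Attributes that cannot be read are returned as None.
    """
    device = Path(sysfs_root) / bdf
    status = {}
    for name in LINK_ATTRIBUTES:
        attr = device / name
        status[name] = attr.read_text().strip() if attr.is_file() else None
    return status


def is_link_downgraded(status):
    """True if the negotiated speed or width differs from the device maximum."""
    return (status["current_link_speed"] != status["max_link_speed"]
            or status["current_link_width"] != status["max_link_width"])


if __name__ == "__main__":
    link = read_pcie_link("0000:3b:00.0")  # placeholder address
    print(link)
    print("running below device maximum:", is_link_downgraded(link))
```

A card seated in a Gen4 slot, or in a slot wired for fewer than 16 lanes, would be expected to show up here as downgraded.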
